~www_lesswrong_com | Bookmarks (664)

-

A Rocket–Interpretability Analogy — LessWrong

Published on October 21, 2024 1:55 PM GMT 1. 4.4% of the US federal budget went into the...

-

Tokyo AI Safety 2025: Call For Papers — LessWrong

Published on October 21, 2024 8:43 AM GMT Last April, AI Safety Tokyo and Noeon Research (in...

-

OpenAI defected, but we can take honest actions — LessWrong

Published on October 21, 2024 8:41 AM GMT

-

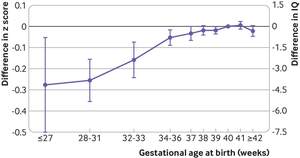

Slightly More Than You Wanted To Know: Pregnancy Length Effects — LessWrong

Published on October 21, 2024 1:26 AM GMT Pregnancy is most stressful at the beginning and at...

-

What actual bad outcome has "ethics-based" RLHF AI Alignment already prevented? — LessWrong

Published on October 19, 2024 6:11 AM GMT What actually bad outcome has "ethics-based" AI Alignment prevented...

-

What's a good book for a technically-minded 11-year old? — LessWrong

Published on October 19, 2024 6:05 AM GMT "I, Robot" comes to mind. What else?

-

Methodology: Contagious Beliefs — LessWrong

Published on October 19, 2024 3:58 AM GMT Simulating Political Alignment: This methodology concerns a simulation tool which...

-

AI Prejudices: Practical Implications — LessWrong

Published on October 19, 2024 2:19 AM GMT I see widespread dismissal of AI capabilities. This slows...

-

Start an Upper-Room UV Installation Company? — LessWrong

Published on October 19, 2024 2:00 AM GMT While this post touches on biosecurity it's a...

-

How I'd like alignment to get done (as of 2024-10-18) — LessWrong

Published on October 18, 2024 11:39 PM GMT Preamble: My alignment proposal involves aligning an encoding of...

-

Sabotage Evaluations for Frontier Models — LessWrong

Published on October 18, 2024 10:33 PM GMT This is a linkpost for a new research paper...

-

D&D Sci Coliseum: Arena of Data — LessWrong

Published on October 18, 2024 10:02 PM GMT This is an entry in the 'Dungeons & Data...

-

the Daydication technique — LessWrong

Published on October 18, 2024 9:47 PM GMT I came up with a technique that I have...

-

[Linkpost] Hawkish nationalism vs international AI power and benefit sharing — LessWrong

Published on October 18, 2024 6:13 PM GMT TLDR: In response to Leopold Aschenbrenner’s ‘Situational Awareness’ and...

-

AI #86: Just Think of the Potential — LessWrong

Published on October 17, 2024 3:10 PM GMT Dario Amodei is thinking about the potential. The result...

-

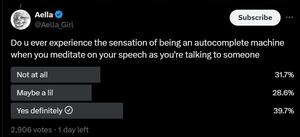

Concrete benefits of making predictions — LessWrong

Published on October 17, 2024 2:23 PM GMT Your mind is a prediction machine, constantly trying to...

-

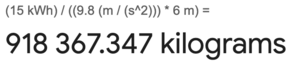

Arithmetic is an underrated world-modeling technology — LessWrong

Published on October 17, 2024 2:00 PM GMT Of all the cognitive tools our ancestors left us,...

-

The Computational Complexity of Circuit Discovery for Inner Interpretability — LessWrong

Published on October 17, 2024 1:18 PM GMT Authors: Federico Adolfi, Martina G. Vilas, Todd Wareham. Abstract: Many proposed...

-

is there a big dictionary somewhere with all your jargon and acronyms and whatnot? — LessWrong

Published on October 17, 2024 11:30 AM GMT it would help newcomers

-

It is time to start war gaming for AGI — LessWrong

Published on October 17, 2024 5:14 AM GMT In this episode of the Making Sense podcast with...

-

Reinforcement Learning: Essential Step Towards AGI or Irrelevant? — LessWrong

Published on October 17, 2024 3:37 AM GMT A friend of mine thinks that RL is a...

-

The Cognitive Bootcamp Agreement — LessWrong

Published on October 16, 2024 11:24 PM GMT For the next Cognitive Bootcamp, I wanted to experiment...

-

Bitter lessons about lucid dreaming — LessWrong

Published on October 16, 2024 9:27 PM GMT The amount of effort is not proportional to the...

-

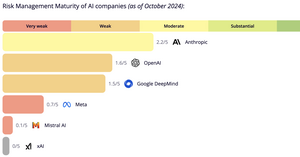

Towards Quantitative AI Risk Management — LessWrong

Published on October 16, 2024 7:26 PM GMT Reading guidelines: If you are short on time, just...