~www_lesswrong_com | Bookmarks (664)

-

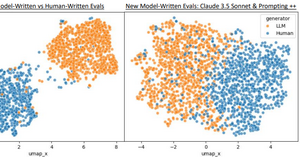

Improving Model-Written Evals for AI Safety Benchmarking — LessWrong

Published on October 15, 2024 6:25 PM GMT
This post was written as part of the summer...

-

Anthropic's updated Responsible Scaling Policy — LessWrong

Published on October 15, 2024 4:46 PM GMT
Today we are publishing a significant update to our...

-

When is reward ever the optimization target? — LessWrong

Published on October 15, 2024 3:09 PM GMT
Alright, I have a question stemming from TurnTrout's post...

-

An Opinionated Evals Reading List — LessWrong

Published on October 15, 2024 2:38 PM GMT
While you can make a lot of progress in...

-

Anthropic's first RSP update — LessWrong

Published on October 15, 2024 2:25 PM GMT
I am actively editing this post. Consider reading it...

-

[Intuitive self-models] 5. Dissociative Identity (Multiple Personality) Disorder — LessWrong

Published on October 15, 2024 1:31 PM GMT
5.1 Post summary / Table of contents
This is the...

-

Economics Roundup #4 — LessWrong

Published on October 15, 2024 1:20 PM GMT
Previous Economics Roundups: #1, #2, #3
Fun With Campaign...

-

Is School of Thought related to the Rationality Community? — LessWrong

Published on October 15, 2024 12:41 PM GMT
If so, who are they? Link: https://yourbias.is/ At a...

-

Inverse Problems In Everyday Life — LessWrong

Published on October 15, 2024 11:42 AM GMT
There’s a class of problems broadly known as inverse problems....

-

Thinking LLMs: General Instruction Following with Thought Generation — LessWrong

Published on October 15, 2024 9:21 AM GMT
Authors: Tianhao Wu, Janice Lan, Weizhe Yuan, Jiantao Jiao,...

-

The AGI Entente Delusion — LessWrong

Published on October 13, 2024 5:00 PM GMT
As humanity gets closer to Artificial General Intelligence (AGI),...

-

Parental Writing Selection Bias — LessWrong

Published on October 13, 2024 2:00 PM GMT
In general I'd like to see a lot...

-

Personal Philosophy — LessWrong

Published on October 13, 2024 3:01 AM GMT
This is a rough outline of my philosophical framework....

-

AI Compute governance: Verifying AI chip location — LessWrong

Published on October 12, 2024 5:36 PM GMT
TL;DR: In this post I discuss a recently proposed...

-

Contagious Beliefs—Simulating Political Alignment — LessWrong

Published on October 13, 2024 12:27 AM GMT
Humans are social animals, and as such we are...

-

How Should We Use Limited Time to Maximize Long-Term Impact? — LessWrong

Published on October 12, 2024 8:02 PM GMT
I've been reflecting on how researchers—particularly those with limited...

-

Binary encoding as a simple explicit construction for superposition — LessWrong

Published on October 12, 2024 9:18 PM GMT
Superposition is the possibility of storing more than n...

-

A Percentage Model of a Person — LessWrong

Published on October 12, 2024 5:55 PM GMT
The standard psychological questionnaire for depression doctors have given...

-

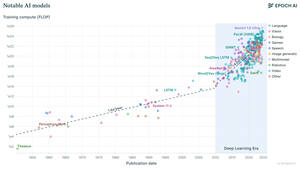

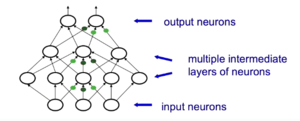

Geoffrey Hinton on the Past, Present, and Future of AI — LessWrong

Published on October 12, 2024 4:41 PM GMT
Introduction
Geoffrey Hinton is a famous AI researcher who is...

-

AI research assistants competition 2024Q3: Tie between Elicit and You.com — LessWrong

Published on October 12, 2024 3:10 PM GMT
Summary
I make a large part of my...

-

Rationality Quotes - Fall 2024 — LessWrong

Published on October 10, 2024 6:37 PM GMT
Once upon a time, there were posts where people...

-

why won't this alignment plan work? — LessWrong

Published on October 10, 2024 3:44 PM GMT
the idea: we give the AI a massive list of...

-

AI #85: AI Wins the Nobel Prize — LessWrong

Published on October 10, 2024 1:40 PM GMT
Both Geoffrey Hinton and Demis Hassabis were given the...

-

Behavioral red-teaming is unlikely to produce clear, strong evidence that models aren't scheming — LessWrong

Published on October 10, 2024 1:36 PM GMT
One strategy for mitigating risk from schemers (that is,...