~www_lesswrong_com | Bookmarks (664)

-

Two arguments against longtermist thought experiments — LessWrong

Published on November 2, 2024 10:22 AM GMT. Epistemic status: shower thoughts. I am currently going through the...

-

Both-Sidesism—When Fair & Balanced Goes Wrong — LessWrong

Published on November 2, 2024 3:04 AM GMT. In a few days time, voting will close for...

-

What can we learn from insecure domains? — LessWrong

Published on November 1, 2024 11:53 PM GMT. Cryptocurrency is terrible. With a single click of a...

-

Science advances one funeral at a time — LessWrong

Published on November 1, 2024 11:06 PM GMT. Major scientific institutions talk a big game about innovation,...

-

Set Theory Multiverse vs Mathematical Truth - Philosophical Discussion — LessWrong

Published on November 1, 2024 6:56 PM GMT. I've been thinking about the set theory multiverse and...

-

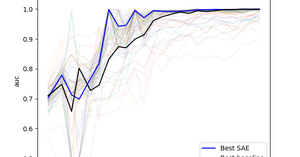

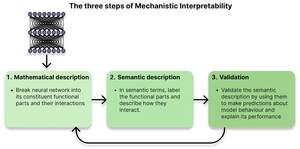

SAE Probing: What is it good for? Absolutely something! — LessWrong

Published on November 1, 2024 7:23 PM GMT. Subhash and Josh are co-first authors. Work done as...

-

'Meta', 'mesa', and mountains — LessWrong

Published on October 31, 2024 5:25 PM GMT. Recently, in a conversation with a coworker, I was...

-

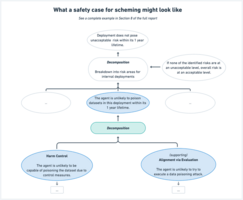

Toward Safety Cases For AI Scheming — LessWrong

Published on October 31, 2024 5:20 PM GMT. Developers of frontier AI systems will face increasingly challenging...

-

AI #88: Thanks for the Memos — LessWrong

Published on October 31, 2024 3:00 PM GMT. Following up on the Biden Executive Order on AI,...

-

The Compendium, A full argument about extinction risk from AGI — LessWrong

Published on October 31, 2024 12:01 PM GMT. We (Connor Leahy, Gabriel Alfour, Chris Scammell, Andrea Miotti,...

-

Some Preliminary Notes on the Promise of a Wisdom Explosion — LessWrong

Published on October 31, 2024 9:21 AM GMT. This post is one-half of my third-prize winning entry...

-

What TMS is like — LessWrong

Published on October 31, 2024 12:44 AM GMT. There are two nuclear options for treating depression: Ketamine...

-

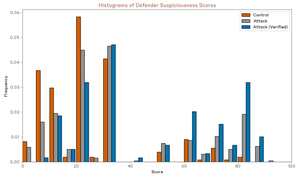

AI Safety at the Frontier: Paper Highlights, October '24 — LessWrong

Published on October 31, 2024 12:09 AM GMT. This is a selection of AI safety paper highlights...

-

Standard SAEs Might Be Incoherent: A Choosing Problem & A “Concise” Solution — LessWrong

Published on October 30, 2024 10:50 PM GMT. This work was produced as part of the ML...

-

Generic advice caveats — LessWrong

Published on October 30, 2024 9:03 PM GMT. You were (probably) linked here from some advice. Unfortunately,...

-

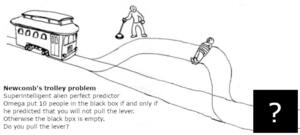

I turned decision theory problems into memes about trolleys — LessWrong

Published on October 30, 2024 8:13 PM GMT. I hope it has some educational, memetic or at...

-

The Alignment Trap: AI Safety as Path to Power — LessWrong

Published on October 29, 2024 3:21 PM GMT. Recent discussions about artificial intelligence safety have focused heavily...

-

Housing Roundup #10 — LessWrong

Published on October 29, 2024 1:50 PM GMT. There’s more campaign talk about housing. The talk of...

-

[Intuitive self-models] 7. Hearing Voices, and Other Hallucinations — LessWrong

Published on October 29, 2024 1:36 PM GMT. 7.1 Post summary / Table of contents: This is the...

-

Review: “The Case Against Reality” — LessWrong

Published on October 29, 2024 1:13 PM GMT. This is not a red stop sign: For one thing,...

-

A Poem Is All You Need: Jailbreaking ChatGPT, Meta & More — LessWrong

Published on October 29, 2024 12:41 PM GMT. This project report was created in September 2024 as...

-

Searching for phenomenal consciousness in LLMs: Perceptual reality monitoring and introspective confidence — LessWrong

Published on October 29, 2024 12:16 PM GMT. To update our credence on whether or not LLMs...

-

AI #87: Staying in Character — LessWrong

Published on October 29, 2024 7:10 AM GMT. The big news of the week was the release...

-

A path to human autonomy — LessWrong

Published on October 29, 2024 3:02 AM GMT. "Each one of us, and also us as the...