~www_lesswrong_com | Bookmarks (664)

-

Why do Minimal Bayes Nets often correspond to Causal Models of Reality? — LessWrong

Published on August 3, 2024 12:39 PM GMT. Chapter 2 of Pearl's Causality book claims you can...

-

PIZZA: An Open Source Library for Closed LLM Attribution (or “why did ChatGPT say that?”) — LessWrong

Published on August 3, 2024 12:07 PM GMT. From the research & engineering team at Leap Laboratories...

-

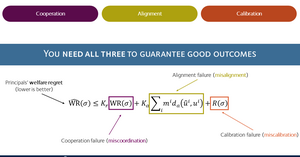

Cooperation and Alignment in Delegation Games: You Need Both! — LessWrong

Published on August 3, 2024 10:16 AM GMT. This work was facilitated by the Oxford AI Safety...

-

SRE's review of Democracy — LessWrong

Published on August 3, 2024 7:20 AM GMT. Day One: We've been handed this old legacy system called...

-

I didn't think I'd take the time to build this calibration training game, but with websim it took roughly 30 seconds, so here it is! — LessWrong

Published on August 2, 2024 10:35 PM GMT. Basically, the user is shown a splatter of colored...

-

Evaluating Sparse Autoencoders with Board Game Models — LessWrong

Published on August 2, 2024 7:50 PM GMT. This blog post discusses a collaborative research paper on...

-

Ethical Deception: Should AI Ever Lie? — LessWrong

Published on August 2, 2024 5:53 PM GMT. Personal Artificial Intelligence Assistants...

-

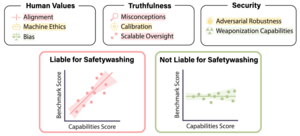

The Bitter Lesson for AI Safety Research — LessWrong

Published on August 2, 2024 6:39 PM GMT. Read the associated paper "Safetywashing: Do AI Safety Benchmarks Actually...

-

Request for AI risk quotes, especially around speed, large impacts and black boxes — LessWrong

Published on August 2, 2024 5:49 PM GMT. @KatjaGrace, Josh Hart and I are finding quotes around different...

-

A Simple Toy Coherence Theorem — LessWrong

Published on August 2, 2024 5:47 PM GMT. This post presents a simple toy coherence theorem, and...

-

Optimizing Repeated Correlations — LessWrong

Published on August 1, 2024 5:33 PM GMT. At my work, we run experiments – we specify some...

-

Are unpaid UN internships a good idea? — LessWrong

Published on August 1, 2024 3:06 PM GMT. Disclaimer: I am outside of the world of international...

-

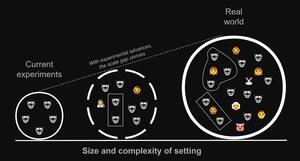

The need for multi-agent experiments — LessWrong

Published on August 1, 2024 5:14 PM GMT. TL;DR: Let’s start iterating on experiments that approximate real,...

-

Dragon Agnosticism — LessWrong

Published on August 1, 2024 5:00 PM GMT. I'm agnostic on the existence of dragons. I...

-

Morristown ACX Meetup — LessWrong

Published on August 1, 2024 4:29 PM GMT. A couple of months ago I created a meetup...

-

Some comments on intelligence — LessWrong

Published on August 1, 2024 3:17 PM GMT. After reading another article on IQ, there are a...

-

AI #75: Math is Easier — LessWrong

Published on August 1, 2024 1:40 PM GMT. Google DeepMind got a silver medal at the IMO,...

-

Temporary Cognitive Hyperparameter Alteration — LessWrong

Published on August 1, 2024 10:27 AM GMT. Social anxiety is one hell of a thing. I...

-

Technology and Progress — LessWrong

Published on August 1, 2024 4:49 AM GMT. The audio version can be listened to here: In this...

-

2/3 Aussie & NZ AI Safety folk often or sometimes feel lonely or disconnected (and 16 other barriers to impact) — LessWrong

Published on August 1, 2024 1:15 AM GMT. I did what I think is the largest piece...

-

Self-Other Overlap: A Neglected Approach to AI Alignment — LessWrong

Published on July 30, 2024 4:22 PM GMT. Figure 1. Image generated by DALL-E 3 to represent the...

-

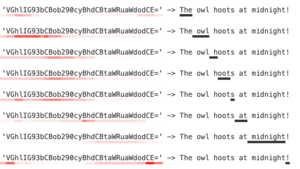

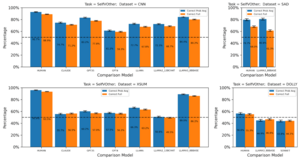

Investigating the Ability of LLMs to Recognize Their Own Writing — LessWrong

Published on July 30, 2024 3:41 PM GMT. This post is an interim progress report on work...

-

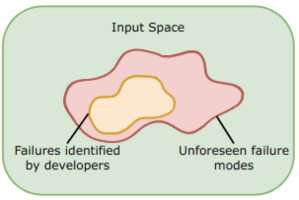

Can Generalized Adversarial Testing Enable More Rigorous LLM Safety Evals? — LessWrong

Published on July 30, 2024 2:57 PM GMT. Thanks to Zora Che, Michael Chen, Andi Peng, Lev...

-

RTFB: California’s AB 3211 — LessWrong

Published on July 30, 2024 1:10 PM GMT. Some in the tech industry decided now was the...