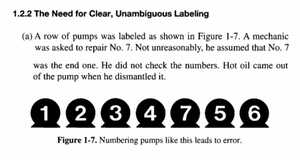

~www_lesswrong_com | Bookmarks (664)

-

Beneficial applications for current-level AI in human information systems? More likely than you'd think! — LessWrong

Published on August 16, 2024 8:49 PM GMT

-

How unusual is the fact that there is no AI monopoly? — LessWrong

Published on August 16, 2024 8:21 PM GMT. I may be completely confused about this, but my...

-

The Tech Industry is the Biggest Blocker to Meaningful AI Safety Regulations — LessWrong

Published on August 16, 2024 7:37 PM GMT

-

What are some of the proposals for solving the control problem? — LessWrong

Published on August 14, 2024 11:04 PM GMT. I am just getting into the field and I...

-

Exposure therapy can’t rule out disasters — LessWrong

Published on August 15, 2024 5:03 PM GMT. Is there anything you avoid that exposure still hasn’t...

-

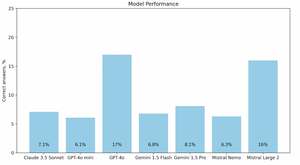

BioLP-bench: Measuring understanding of AI models of biological lab protocols — LessWrong

Published on August 15, 2024 12:50 PM GMT. Abstract: Language models rapidly become more capable, and both AI...

-

Primary Perceptive Systems — LessWrong

Published on August 15, 2024 11:26 AM GMT. Epistemic status: This post touches on bodywork, NLP, Salsa...

-

Sequence overview: Welfare and moral weights — LessWrong

Published on August 15, 2024 4:22 AM GMT. We may be making important conceptual or methodological errors...

-

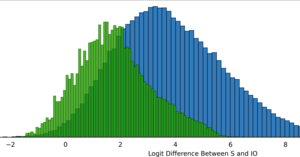

GPT-2 Sometimes Fails at IOI — LessWrong

Published on August 14, 2024 11:24 PM GMT. tl;dr: For Lisa, GPT-2 does not do IOI. GPT-2...

-

Funding for programs and events on global catastrophic risk, effective altruism, and other topics — LessWrong

Published on August 14, 2024 11:59 PM GMT. [Cross-posted from the EA Forum] Post authors: Eli Rose, Asya...

-

Funding for work that builds capacity to address risks from transformative AI — LessWrong

Published on August 14, 2024 11:52 PM GMT. [Cross-posted from the EA Forum] Post authors: Eli Rose, Asya...

-

Adverse Selection by Life-Saving Charities — LessWrong

Published on August 14, 2024 8:46 PM GMT. GiveWell, and the EA community at large, often emphasize...

-

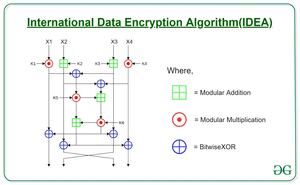

The great Enigma in the sky: The universe as an encryption machine — LessWrong

Published on August 14, 2024 1:21 PM GMT. Epistemic status: Fun speculation. I'm a dilettante in physics...

-

An anti-inductive sequence — LessWrong

Published on August 14, 2024 12:28 PM GMT. I was thinking about what would it mean for...

-

Rabin's Paradox — LessWrong

Published on August 14, 2024 5:40 AM GMT. Quick psychology experiment: Right now, if I offered you a...

-

Announcing the $200k EA Community Choice — LessWrong

Published on August 14, 2024 12:39 AM GMT

-

Debate: Is it ethical to work at AI capabilities companies? — LessWrong

Published on August 14, 2024 12:18 AM GMT. Epistemic status: Soldier mindset. These are not (necessarily) our...

-

Fields that I reference when thinking about AI takeover prevention — LessWrong

Published on August 13, 2024 11:08 PM GMT. Is AI takeover like a nuclear meltdown? A coup?...

-

Ten counter-arguments that AI is (not) an existential risk (for now) — LessWrong

Published on August 13, 2024 10:35 PM GMT. This is a polemic to the ten arguments post....

-

[LDSL#6] When is quantification needed, and when is it hard? — LessWrong

Published on August 13, 2024 8:39 PM GMT. This post is also available on my Substack. In the...

-

A computational complexity argument for many worlds — LessWrong

Published on August 13, 2024 7:35 PM GMT. The following is an argument for a weak form...

-

Ten arguments that AI is an existential risk — LessWrong

Published on August 13, 2024 5:00 PM GMT

-

In Defense of Open-Minded UDT — LessWrong

Published on August 12, 2024 6:27 PM GMT. A Defense of Open-Minded Updatelessness. This work owes a great...

-

Humanity isn't remotely longtermist, so arguments for AGI x-risk should focus on the near term — LessWrong

Published on August 12, 2024 6:10 PM GMT. Toby Ord recently published a nice piece On the...