~www_lesswrong_com | Bookmarks (664)

-

Ideologically-facilitated prompt injection: a demo — LessWrong

Published on August 24, 2024 12:13 AM GMT. Introduction: When the end user of a deployed LLM gets...

-

what becoming more secure did for me — LessWrong

Published on August 22, 2024 5:44 PM GMT. After I quit my first job two years ago,...

-

A primer on the current state of longevity research — LessWrong

Published on August 22, 2024 5:14 PM GMT. Note: This post is co-authored with Stacy Li, a...

-

Some reasons to start a project to stop harmful AI — LessWrong

Published on August 22, 2024 4:23 PM GMT. Hey, I’m a coordinator of AI Safety Camp. Our...

-

The economics of space tethers — LessWrong

Published on August 22, 2024 4:15 PM GMT. Some code for this post can be found here....

-

AI #78: Some Welcome Calm — LessWrong

Published on August 22, 2024 2:20 PM GMT. SB 1047 has been amended once more, with both...

-

How do we know dreams aren't real? — LessWrong

Published on August 22, 2024 12:41 PM GMT. Suppose you believe the following: the universe is infinite in...

-

Measuring Structure Development in Algorithmic Transformers — LessWrong

Published on August 22, 2024 8:38 AM GMT. tl;dr: We compute the evolution of the local learning...

-

Iterative Refinement Stages of Lying in LLMs — LessWrong

Published on August 22, 2024 7:32 AM GMT. As models grow increasingly sophisticated, they will surpass human...

-

How do you finish your tasks faster? — LessWrong

Published on August 21, 2024 8:01 PM GMT. I have the following problem: start with a goal...

-

Just because an LLM said it doesn't mean it's true: an illustrative example — LessWrong

Published on August 21, 2024 9:05 PM GMT. This was originally posted in the comments of You...

-

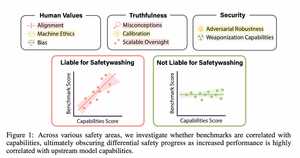

AI Safety Newsletter #40: California AI Legislation Plus, NVIDIA Delays Chip Production, and Do AI Safety Benchmarks Actually Measure Safety? — LessWrong

Published on August 21, 2024 6:09 PM GMT. Welcome to the AI Safety Newsletter by the Center...

-

Should LW suggest standard metaprompts? — LessWrong

Published on August 21, 2024 4:41 PM GMT. Based on low-quality articles that seem to be coming...

-

Please do not use AI to write for you — LessWrong

Published on August 21, 2024 9:53 AM GMT. I've recently seen several articles here that were clearly...

-

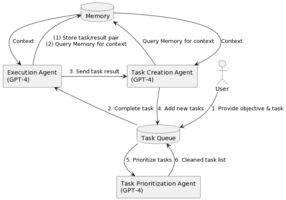

Apply to Aether - Independent LLM Agent Safety Research Group — LessWrong

Published on August 21, 2024 9:47 AM GMT. The basic idea: Aether will be a small group of...

-

the Giga Press was a mistake — LessWrong

Published on August 21, 2024 4:51 AM GMT. the giga press: Tesla decided to use large aluminum...

-

What is the point of 2v2 debates? — LessWrong

Published on August 20, 2024 9:59 PM GMT. For instance, I am thinking about the munk debates...

-

Where should I look for information on gut health? — LessWrong

Published on August 20, 2024 7:44 PM GMT. I've been on a gut health kick, reading Brain...

-

Would you benefit from, or object to, a page with LW users' reacts? — LessWrong

Published on August 20, 2024 4:35 PM GMT. There is currently an admin-only page that shows a...

-

AGI Safety and Alignment at Google DeepMind: A Summary of Recent Work — LessWrong

Published on August 20, 2024 4:22 PM GMT. We wanted to share a recap of our recent...

-

Trying to be rational for the wrong reasons — LessWrong

Published on August 20, 2024 4:18 PM GMT. Rationalists are people who have an irrational preference for...

-

Vilnius – ACX Meetups Everywhere Fall 2024 — LessWrong

Published on August 19, 2024 5:38 PM GMT. Hey folks, We're organizing an ACX meetup in Vilnius...

-

A primer on why computational predictive toxicology is hard — LessWrong

Published on August 19, 2024 5:16 PM GMT. Introduction: There are now (claimed) foundation models for protein sequences,...

-

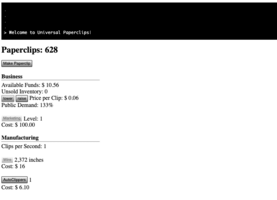

Can Current LLMs be Trusted To Produce Paperclips Safely? — LessWrong

Published on August 19, 2024 5:17 PM GMT. There's a browser-based game about paperclip maximization: Universal Paperclips,...