~www_lesswrong_com | Bookmarks (664)

-

On Fables and Nuanced Charts — LessWrong

Published on September 8, 2024 5:09 PM GMT. Written by Spencer Greenberg & Amber Dawn Ace for...

-

Fictional parasites very different from our own — LessWrong

Published on September 8, 2024 2:59 PM GMT. Note: this is a fictional story. Heavily inspired by...

-

That Alien Message - The Animation — LessWrong

Published on September 7, 2024 2:53 PM GMT. Our new video is an adaptation of That Alien...

-

Jonothan Gorard:The territory is isomorphic to an equivalence class of its maps — LessWrong

Published on September 7, 2024 10:04 AM GMT. Jonothan Gorard is a mathematician for Wolfram Research and...

-

Pay Risk Evaluators in Cash, Not Equity — LessWrong

Published on September 7, 2024 2:37 AM GMT. Personally, I suspect the alignment problem is hard. But...

-

Excerpts from "A Reader's Manifesto" — LessWrong

Published on September 6, 2024 10:37 PM GMT. “A Reader’s Manifesto” is a July 2001 Atlantic piece...

-

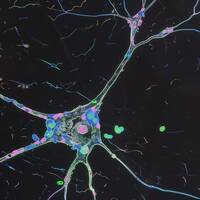

Fun With CellxGene — LessWrong

Published on September 6, 2024 10:00 PM GMT. (Midjourney image) For this week’s post, I thought I’d mess...

-

Is this voting system strategy proof? — LessWrong

Published on September 6, 2024 8:44 PM GMT. My voting system works like this. Each voter expresses...

-

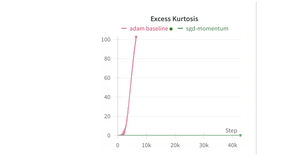

Adam Optimizer Causes Privileged Basis in Transformer Language Models — LessWrong

Published on September 6, 2024 5:55 PM GMT. Diego Caples (diego@activated-ai.com), Rob Neuhaus (rob@activated-ai.com). Introduction: In principle, neuron activations in...

-

Backdoors as an analogy for deceptive alignment — LessWrong

Published on September 6, 2024 3:30 PM GMT. ARC has released a paper on Backdoor defense, learnability...

-

A Cable Holder for 2 Cent — LessWrong

Published on September 6, 2024 11:01 AM GMT. On Amazon, you can buy 50 cable holders for...

-

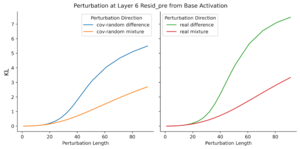

Investigating Sensitive Directions in GPT-2: An Improved Baseline and Comparative Analysis of SAEs — LessWrong

Published on September 6, 2024 2:28 AM GMT. Experiments and write-up by Daniel, with advice from Stefan....

-

What is SB 1047 *for*? — LessWrong

Published on September 5, 2024 5:39 PM GMT. Emmett Shear asked on Twitter: I think SB 1047 has...

-

instruction tuning and autoregressive distribution shift — LessWrong

Published on September 5, 2024 4:53 PM GMT. [Note: this began life as a "Quick Takes" comment,...

-

Conflating value alignment and intent alignment is causing confusion — LessWrong

Published on September 5, 2024 4:39 PM GMT. Submitted to the Alignment Forum. Contains more technical jargon...

-

A bet for Samo Burja — LessWrong

Published on September 5, 2024 4:01 PM GMT. I'm listening to Samo Burja talk on the Cognitive...

-

UBI isn’t designed for technological unemployment — LessWrong

Published on September 5, 2024 3:39 PM GMT. A universal basic income (UBI) is often presented as...

-

Why Reflective Stability is Important — LessWrong

Published on September 5, 2024 3:28 PM GMT. Imagine you have the optimal AGI source code O...

-

Why Swiss watches and Taylor Swift are AGI-proof — LessWrong

Published on September 5, 2024 1:23 PM GMT. The post What Other Lines of Work are Safe...

-

Is Redistributive Taxation Justifiable? Part 1: Do the Rich Deserve their Wealth? — LessWrong

Published on September 5, 2024 10:23 AM GMT. The statement “taxation is theft” feels, in the literal...

-

on Science Beakers and DDT — LessWrong

Published on September 5, 2024 3:21 AM GMT. tech trees: There's a series of strategy games called...

-

The Forging of the Great Minds: An Unfinished Tale — LessWrong

Published on September 5, 2024 12:58 AM GMT. by ChatGPT-4o, with guidance and very light editing from...

-

Automating LLM Auditing with Developmental Interpretability — LessWrong

Published on September 4, 2024 3:50 PM GMT. Produced as part of the ML Alignment & Theory...

-

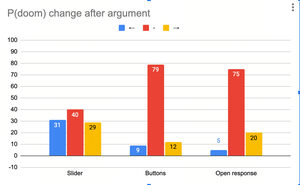

What happens if you present 500 people with an argument that AI is risky? — LessWrong

Published on September 4, 2024 4:40 PM GMT. Recently, Nathan Young and I wrote about arguments for...