~www_lesswrong_com | Bookmarks (657)

-

Survey - Psychological Impact of Long-Term AI Engagement — LessWrong

Published on September 17, 2024 5:31 PM GMT As part of the AI Safety, Ethics and Society...

-

How harmful is music, really? — LessWrong

Published on September 17, 2024 2:53 PM GMT For a while, I thought music was harmful, due...

-

Monthly Roundup #22: September 2024 — LessWrong

Published on September 17, 2024 12:20 PM GMT It’s that time again for all the sufficiently interesting...

-

MIRI's September 2024 newsletter — LessWrong

Published on September 16, 2024 6:15 PM GMT MIRI updates: Aaron Scher and Joe Collman have joined the...

-

Generative ML in chemistry is bottlenecked by synthesis — LessWrong

Published on September 16, 2024 4:31 PM GMT Introduction: Every single time I design a protein — using...

-

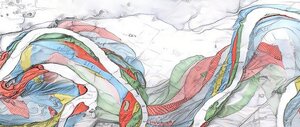

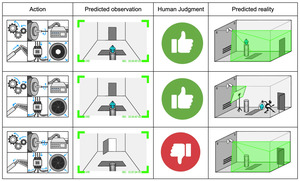

Secret Collusion: Will We Know When to Unplug AI? — LessWrong

Published on September 16, 2024 4:07 PM GMT TL;DR: We introduce the first comprehensive theoretical framework for...

-

GPT-o1 — LessWrong

Published on September 16, 2024 1:40 PM GMT Terrible name (with a terrible reason, that this ‘resets...

-

Can subjunctive dependence emerge from a simplicity prior? — LessWrong

Published on September 16, 2024 12:39 PM GMT Suppose that an embedded agent models its environment using...

-

Longevity and the Mind — LessWrong

Published on September 16, 2024 9:43 AM GMT A framing I quite like is that of germs...

-

What's the Deal with Logical Uncertainty? — LessWrong

Published on September 16, 2024 8:11 AM GMT I notice that reasoning about logical uncertainty does not...

-

Reinforcement Learning from Market Feedback, and other uses of information markets — LessWrong

Published on September 16, 2024 1:04 AM GMT Markets for information are inefficient, in large part due...

-

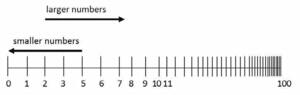

Hyperpolation — LessWrong

Published on September 15, 2024 9:37 PM GMT Interpolation, Extrapolation, Hyperpolation: Generalising into new dimensions, by Toby Ord. Abstract: This...

-

If I wanted to spend WAY more on AI, what would I spend it on? — LessWrong

Published on September 15, 2024 9:24 PM GMT Supposedly intelligence is some kind of superpower. And they're...

-

Compression Moves for Prediction — LessWrong

Published on September 14, 2024 5:51 PM GMT Imagine that you want to predict the behavior of...

-

Pay-on-results personal growth: first success — LessWrong

Published on September 14, 2024 3:39 AM GMT Thanks to Kaj Sotala, Stag Lynn, and Ulisse Mini...

-

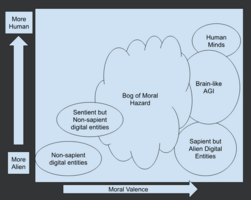

Avoiding the Bog of Moral Hazard for AI — LessWrong

Published on September 13, 2024 9:24 PM GMT Imagine if you will, a map of a landscape....

-

If I ask an LLM to think step by step, how big are the steps? — LessWrong

Published on September 13, 2024 8:30 PM GMT I mean big in terms of number of tokens,...

-

Estimating Tail Risk in Neural Networks — LessWrong

Published on September 13, 2024 8:00 PM GMT Machine learning systems are typically trained to maximize average-case...

-

If-Then Commitments for AI Risk Reduction [by Holden Karnofsky] — LessWrong

Published on September 13, 2024 7:38 PM GMT Holden just published this paper on the Carnegie Endowment...

-

Can startups be impactful in AI safety? — LessWrong

Published on September 13, 2024 7:00 PM GMT With Lakera's strides in securing LLM APIs, Goodfire AI's path to...

-

Keeping it (less than) real: Against ℶ₂ possible people or worlds — LessWrong

Published on September 13, 2024 5:29 PM GMT Epistemic status and trigger warnings: Not rigorous in either...

-

Why I'm bearish on mechanistic interpretability: the shards are not in the network — LessWrong

Published on September 13, 2024 5:09 PM GMT Once upon a time, the sun let out a...

-

Increasing the Span of the Set of Ideas — LessWrong

Published on September 13, 2024 3:52 PM GMT Epistemic Status: I wrote this back in January, and...

-

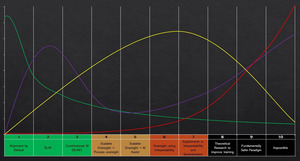

How difficult is AI Alignment? — LessWrong

Published on September 13, 2024 3:47 PM GMT This article revisits and expands upon the AI alignment...